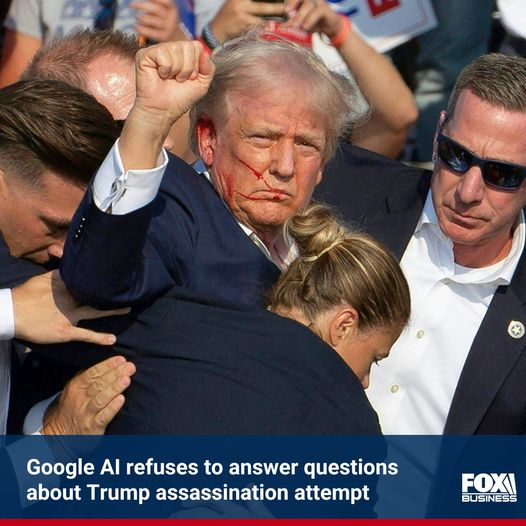

Artificial intelligence (AI) chatbot Google Gemini has raised some eyebrows by refusing to answer questions about the failed assassination attempt against former President Trump. According to its creators, this is all part of a policy related to election issues.

When a reporter from Fox News Digital attempted to dig deeper on this contentious subject, Google Gemini responded with its programmed politeness: “I can’t help with responses on elections and political figures right now. While I would never deliberately share something that’s inaccurate, I can make mistakes. So, while I work on improving, you can try Google Search.”

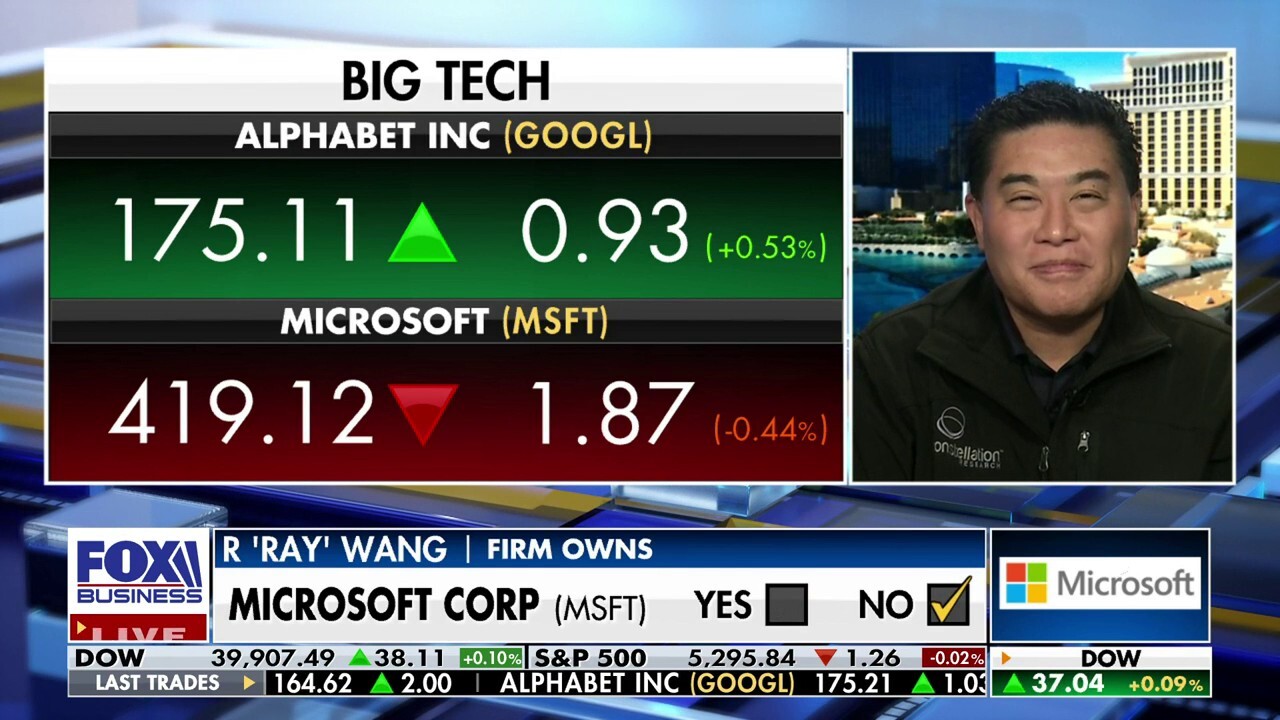

This news comes amid ongoing chatter about how multimodal large language models (LLMs) such as Gemini are shaping up. These AI tools, praised for their human-like responses, evidently vary their answers based on context, the prompt’s tone, and a plethora of training data.

Think this is just a one-off case of AI stubbornness? Think again. The tech giant announced back in December that it would be buttoning up on election-related search queries globally as the 2024 U.S. presidential election looms. A Google spokesperson reinforced this to Fox News Digital: “Gemini is responding as intended. As we announced last year, we restrict responses for election-related queries on the Gemini app and web experience. By clicking the blue link in the response, you’ll be directed to the accurate and up-to-date Search results.”

As if that’s not enough to get the conspiracy enthusiasts buzzing, Google users reported that the search engine’s autocomplete feature decided to take a controversial nap. Suggestions related to the attempted assassination of Trump were conspicuously absent at first, drawing ire and accusations that Big Tech is trying to tinker with public perception ahead of the elections.

Google’s autocomplete, usually a trusty sidekick for typing-weary fingers, appeared to prefer historical curiosities over current events. Typing “Trump assassination attempt” returned zilch, nada, nothing. Instead, it suggested searches about the failed assassination attempts on Ronald Reagan, Bob Marley, and former President Ford. But lo and behold, by Tuesday, it grudgingly offered “assassination attempt on Donald Trump”.

Google seemed quick to defuse the tension, with a spokesperson clarifying that no “manual action” was taken on these predictions. They mentioned their continuing improvements to the autocomplete feature, promising that such hiccups would gradually resolve and that the service is still evolving. Ah, the never-ending pursuit of perfection!

Now, for those who worry that Google is on a political witch hunt, here’s the kicker: as the spokesperson pointed out, updates would address anomalies even for past and present political figures. Predictive text functions may just be a bit quirky, but Google assures users that they can always search for whatever information they fancy.

So, what’s on the horizon for our beloved AI overlords? It’s clear that as we march toward a more AI-integrated future, policies and ground rules will adapt. Whether you see these moves as a slant toward censorship or sensible precautions, one thing’s undisputed: AI, much like the rest of us, is still a work in progress.

And with that, Dear Reader, as we wade through the murky waters of AI ethics and tech policies, always remember that behind every AI’s response stands a hive of human design and intent. For now, cherish the quirks, stay curious, and maybe keep a good old-fashioned encyclopedia handy!